This article explores the current state of AI development, focusing on the limitations of today’s large language models (LLMs) and the need for a more sophisticated approach inspired by the human mind. Drawing on insights from renowned computer scientist Yann LeCun, it highlights the importance of persistent memory, context understanding, and a 'society of mind' architecture for truly intelligent AI.

Ask ten people how large language models work, and you’ll get ten answers. Or maybe you’ll only really get two or three: the rest will offer some version of “don’t know, don’t care,” or lament how little is generally understood about the “guts” of these pervasive systems. It’s rare to encounter a technology this ubiquitous that most people don’t really understand.

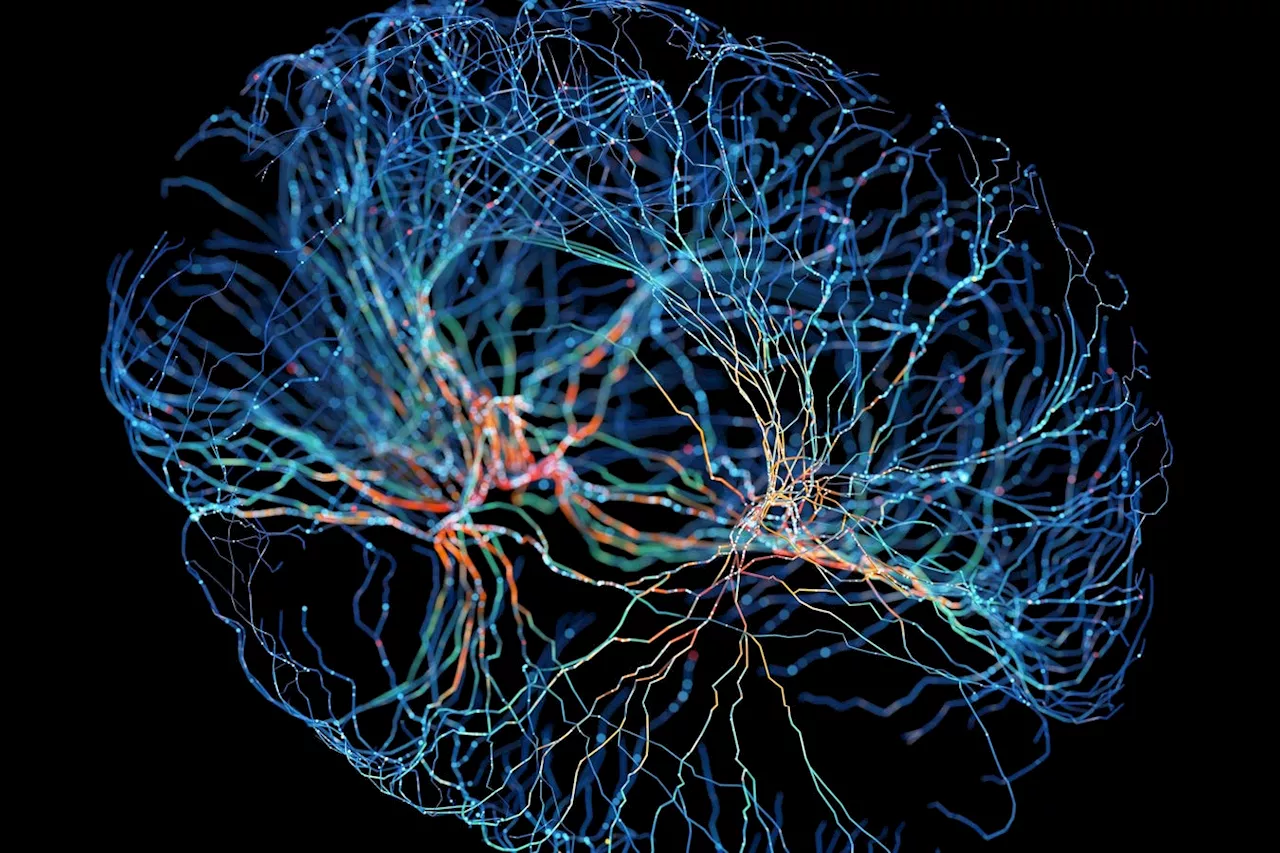

To be fair, the stage was already set for this kind of knowledge gap in the tech world. Not many people felt like explaining what the cloud was 10 or 15 years ago, and when it comes to crypto, most people still shy away from describing the blockchain in any great detail. With AI, though, it’s different. The stakes are different: the impact on our society and our personal lives is different. So it helps to know a little more about how AI agents, LLMs and neural nets make decisions and process what’s around them.

I’m going to go over a few basic ideas I’ve heard people discussing, using some specific quotes from renowned computer scientist Yann LeCun that I heard at a recent event. LeCun had some very important things to say about the real state of AI development, and what it means for our world.

Here’s the first major idea that people close to the industry have been presenting to audiences for the last few years. In a sense, it’s a disclaimer about the rapid emergence of AI as a sort of human surrogate: a qualifier on our general sense that nothing stands in the way of AI becoming more and more human over time. If you look at the results from big new models like OpenAI o1, or the newest one from DeepSeek, you might think that AI can leapfrog the bar of human intelligence just by adding power and tokens. But according to some of the best theorists, that would be wrong, because true intelligence isn’t a single quantity that grows linearly with scale; it’s a collection of systems working together to produce a very precise and robust result.

LeCun suggests that these systems need a “persistent memory” to be powerful, and experts have been making that point again and again as they work on newer AI models. You can talk about this in terms of memory, or in terms of context: for example, the context windows that the systems use to perceive what they’re working on.
LLMs rely on two types of memory: parametric memory, the knowledge encoded in the model’s trained weights, and working memory, the information held in the context window during a given task. You can roughly map these to the two types of human memory that make up our overall cognitive operations, long-term memory and short-term memory. Parametric memory would be the long-term memory: the historical context and knowledge base that undergirds the machine’s long-term thinking. Working memory would be the short-term memory, basically corresponding to the context window and the context clues the machine uses to think in real time.

If, as Marvin Minsky (author of The Society of Mind) and LeCun point out, the human mind is more elaborate than a single supercomputer, what’s the takeaway?

“We need a completely new architecture,” LeCun says. “It's not going to happen with LLMs. It's not going to happen with anything we've done so far. We need … to have common sense, … if you give a standard puzzle to an LLM, it will just regurgitate the answer.”

One thing he suggests is the emergence of something called “world models,” where AI starts to build the context it needs to progress and to become more cognitively like a human being.
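To make the split between parametric and working memory a bit more concrete, here is a toy sketch in Python. It is an illustration under loose assumptions, not how any real LLM works: the `ToyModel` class, its dictionary of "knowledge," the tiny window size and the whitespace tokenization are all hypothetical simplifications. Real models encode what they know in learned weights, and their context windows hold thousands of tokens.

```python
from __future__ import annotations

from collections import deque


class ToyModel:
    """Hypothetical toy stand-in for an LLM, illustrating two kinds of memory only."""

    def __init__(self, knowledge: dict[str, str], window_size: int) -> None:
        # "Parametric" (long-term) memory: set once, as if by training,
        # and never updated by anything the model reads later.
        self.parameters = dict(knowledge)
        # "Working" (short-term) memory: a fixed-size context window.
        # A deque with maxlen silently discards the oldest token when full.
        self.context: deque[str] = deque(maxlen=window_size)

    def read(self, text: str) -> None:
        # Whitespace splitting is a crude stand-in for real tokenization.
        for token in text.split():
            self.context.append(token)

    def recalls(self, token: str) -> bool:
        # A token is only "visible" while it is still inside the window.
        return token in self.context

    def knows(self, key: str) -> str | None:
        # Lookup in the fixed parameters; None if the "fact" was never learned.
        return self.parameters.get(key)

    def new_session(self) -> None:
        # Starting a new conversation empties working memory; nothing read
        # so far was ever written back into the parameters.
        self.context.clear()


model = ToyModel({"capital_of_france": "Paris"}, window_size=4)
model.read("my name is Ada and I like chess")
print(model.recalls("Ada"))              # False: pushed out of the 4-token window
print(model.recalls("chess"))            # True: still inside the window
print(model.knows("capital_of_france"))  # Paris
model.new_session()
print(model.recalls("chess"))            # False: working memory was cleared
```

The point of the sketch is the asymmetry: whatever falls out of the window is gone, and nothing read during a conversation is carried into long-term storage. That gap is one way to read LeCun’s call for persistent memory.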
