Understanding where AI errors come from is essential to mitigating them appropriately, and Generative AI and Classical AI err in different ways.

As we deploy Generative AI models to synthesize information, generate content, and serve as our copilots, we need to understand how and why these models may produce errors. More importantly, we must be prepared for entirely different categories of errors than those produced by Classical AI models. For the purposes of this article, Classical AI refers to AI systems that use rules and logic to mimic human intelligence.

Classical AI models are primarily used to analyze data and make predictions. What is a customer likely to consume next? What is the best action a salesperson can take with a customer at a given moment? Which customers are at risk of churn, why, and how can we mitigate that risk? Classical AI algorithms process data and return expected results such as analyses or predictions. They are trained on data tailored to the task, which helps them perform it exceedingly well.

Generative AI models, on the other hand, are prebuilt to serve as a foundation for a range of applications. Generative AI entails training one model on a huge amount of data and then adapting it to many applications. The data can take the form of text, images, speech, and more. The tasks such models can perform include, but are not limited to, answering questions, analyzing sentiment, extracting information from text, labeling images, and recognizing objects.

Predictably, Classical AI and Generative AI use different training paradigms. Classical AI focuses on a narrow task and is therefore trained on a well-defined, curated dataset, whereas Generative AI is trained on large volumes of public data to learn language and the information it contains. Because of these differences in data curation, along with other differences in how models are trained and how their outputs are consumed, Classical and Generative AI fail at different points.

Generative AI models are trained on a diverse range of data. But if they receive an input that deviates significantly from what they were trained on, they might produce inaccurate or nonsensical outputs. You might also get inaccurate information if you ask such a model about a topic that emerged after its training data was collected. And, of course, biased or ambiguous questions can lead Generative AI models astray.

United States Latest News, United States Headlines

Similar News:You can also read news stories similar to this one that we have collected from other news sources.

Upping Your Prompt Engineering Superpowers Via Target-Your-Response Techniques When Using Generative AIA vital technique for prompt engineering entails thinking ahead and telling generative AI what kind of response you are expecting to get. Here is the insider scoop.

Upping Your Prompt Engineering Superpowers Via Target-Your-Response Techniques When Using Generative AIA vital technique for prompt engineering entails thinking ahead and telling generative AI what kind of response you are expecting to get. Here is the insider scoop.

Read more »

How Google Cloud Is Leveraging Generative AI To Outsmart CompetitionIt has a smaller 256-chip footprint per Pod, which is optimized for the state-of-the-art neural network architecture based on the transformer architecture.

How Google Cloud Is Leveraging Generative AI To Outsmart CompetitionIt has a smaller 256-chip footprint per Pod, which is optimized for the state-of-the-art neural network architecture based on the transformer architecture.

Read more »

Ramaswamy claims he would've handled Jan. 6 differently than TrumpRepublican presidential candidate Vivek Ramaswamy said he would have handled the Capitol riot on Jan. 6, 2021, “very differently” than former President Donald Trump, but emphasized a distinction between “bad behavior and illegal behavior.”

Ramaswamy claims he would've handled Jan. 6 differently than TrumpRepublican presidential candidate Vivek Ramaswamy said he would have handled the Capitol riot on Jan. 6, 2021, “very differently” than former President Donald Trump, but emphasized a distinction between “bad behavior and illegal behavior.”

Read more »

These 90 Day: Last Resort Cast Members Act Shockingly Different Than They Used To90 Day: The Last Resort cast acts differently.

These 90 Day: Last Resort Cast Members Act Shockingly Different Than They Used To90 Day: The Last Resort cast acts differently.

Read more »

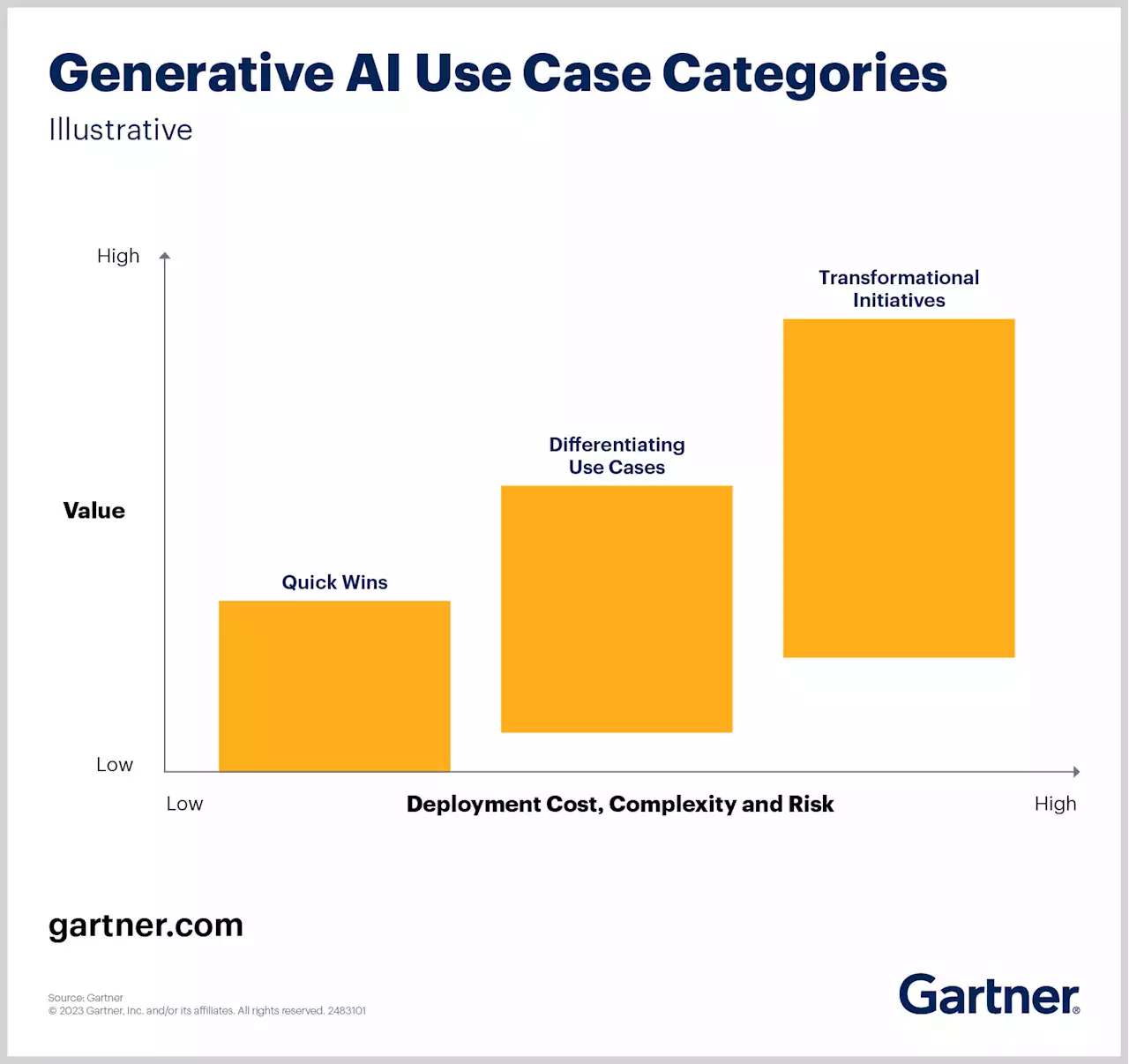

Measuring the ROI of GenAI: Assessing Value and CostTo properly evaluate generative AI investments, measure both financial ROI and nonfinancial benefits, such as competitive advantage across your GenAI portfolio.

Measuring the ROI of GenAI: Assessing Value and CostTo properly evaluate generative AI investments, measure both financial ROI and nonfinancial benefits, such as competitive advantage across your GenAI portfolio.

Read more »