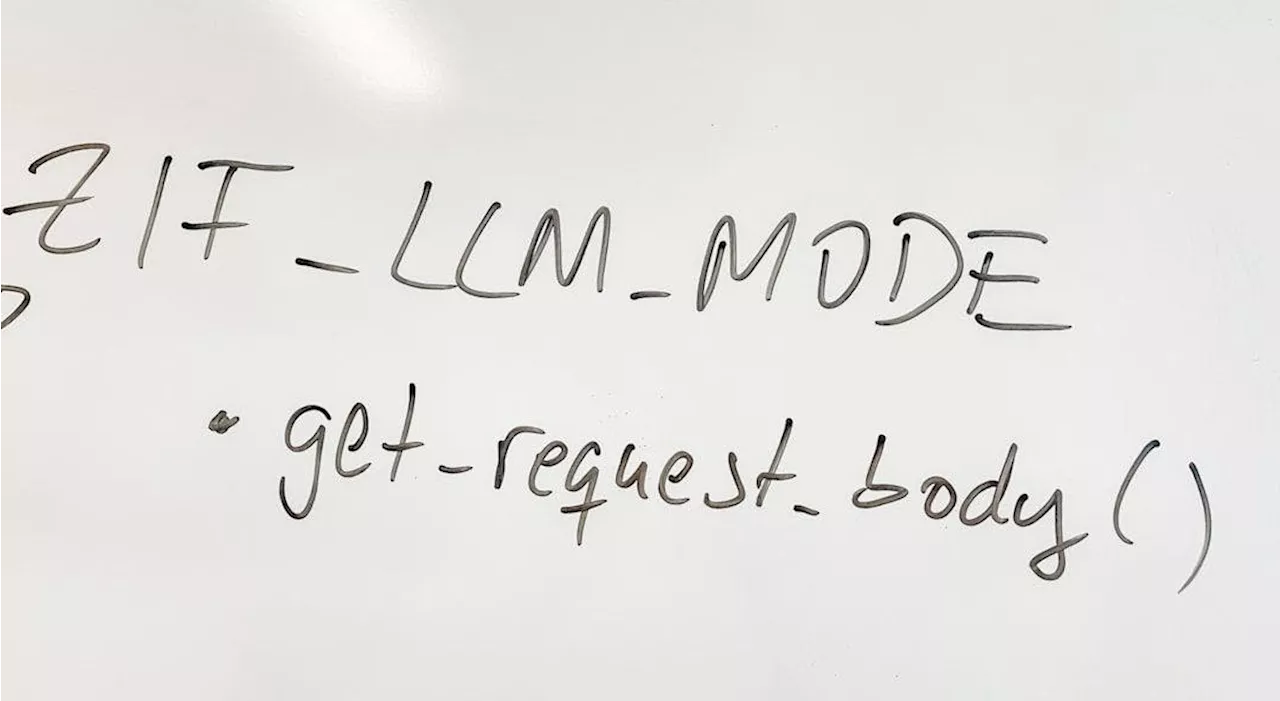

Researchers propose TOOLDEC, a finite-state machine-guided decoding algorithm for LLMs that reduces errors and improves tool use.

Authors: Kexun Zhang, UC Santa Barbara (equal contribution); Hongqiao Chen, Northwood High School (equal contribution); Lei Li, Carnegie Mellon University; William Yang Wang, UC Santa Barbara.

Table of Links: Abstract and Intro; Related Work; ToolDec: LLM Tool Use via Finite-State Decoding; Experiment: ToolDec Eliminates Syntax Errors; Experiment: ToolDec Enables Generalizable Tool Selection; Conclusion and References; Appendix

We evaluate ToolDec's performance separately for the two baselines using the benchmarks from the original papers. Since ToolkenGPT uses special tokens to call tools, […]

ToolDec enhances in-context learning tool LLMs. Table 3 shows that ToolDec completely eliminated all three errors. Because function name hallucination is the most prevalent tool-related error, a slightly stronger baseline mitigates it with fuzzy matching by suffix. We present the results of the baseline with fuzzy matching as ToolLLM + Fuzzy Matching, and without it as ToolLLM.
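The excerpt does not define "fuzzy matching by suffix", so the following is only a minimal sketch of one plausible reading: a generated function name that is not in the valid set is mapped to the valid name sharing the longest common suffix. The helper names, the example tool names, and the `min_overlap` threshold are all hypothetical and not taken from the paper.

```python
from typing import Optional, Sequence


def common_suffix_len(a: str, b: str) -> int:
    """Return the length of the longest common suffix of a and b."""
    n = 0
    while n < len(a) and n < len(b) and a[-1 - n] == b[-1 - n]:
        n += 1
    return n


def fuzzy_match_by_suffix(
    name: str, valid_names: Sequence[str], min_overlap: int = 1
) -> Optional[str]:
    """Map a possibly hallucinated name to a valid one (assumed heuristic).

    Returns the valid name with the longest shared suffix, or None when
    no candidate shares at least `min_overlap` trailing characters.
    """
    if name in valid_names:
        return name
    best = max(valid_names, key=lambda v: common_suffix_len(name, v), default=None)
    if best is None or common_suffix_len(name, best) < min_overlap:
        return None
    return best


# Hypothetical tool names, for illustration only.
valid = ["get_weather_for_city", "search_flights", "book_hotel"]
repaired = fuzzy_match_by_suffix("weather_for_city", valid)
unmatched = fuzzy_match_by_suffix("translate_text", valid, min_overlap=3)
print(repaired, unmatched)
```

A heuristic like this can only repair names after they have been generated, and it returns nothing when no valid name is close enough, which is consistent with the article describing it as only a slightly better baseline.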

ToolDec increased ToolkenGPT's accuracy while being much faster in inference. Although ToolkenGPT + backtrace achieved slightly better accuracy than ToolDec, […]

In this section, we show that ToolDec can completely eliminate syntactic errors, resulting in better accuracy and shorter inference time.

4.1 BASELINES AND BENCHMARKS

ToolLLM. ToolLLM is an in-context learning approach to tool-augmented language models. It uses an instruction-tuned LLaMA-7B model to call tools. Given the natural language instruction of a tool-dependent task, an API retriever first retrieves a small subset of relevant functions.

4.3 EXPERIMENTAL RESULTS

ToolDec eliminated all three types of tool-related errors and achieved the best win rate and pass rate, even beating ChatGPT. ToolDec is also beneficial to fine-tuned tool LLMs.
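To illustrate why finite-state-guided decoding can rule out invalid tool names by construction, here is a toy sketch rather than the authors' implementation. It assumes character-level "tokens", a trie over valid tool names acting as the finite-state machine, and a stand-in scoring function in place of real model logits. The names `build_trie`, `constrained_decode`, `toy_score`, the `END` marker, and the tool names are all hypothetical.

```python
from typing import Callable, Dict, List

END = "<end>"  # hypothetical end-of-name marker


def build_trie(names: List[str]) -> Dict:
    """Build a character-level trie; each node acts as one FSM state."""
    root: Dict = {}
    for name in names:
        node = root
        for ch in name:
            node = node.setdefault(ch, {})
        node[END] = {}  # accepting transition
    return root


def constrained_decode(trie: Dict, score: Callable[[str, str], float]) -> str:
    """Greedy decoding restricted to transitions allowed in the current state."""
    node, prefix = trie, ""
    while True:
        allowed = list(node.keys())  # only tokens the FSM permits here
        best = max(allowed, key=lambda tok: score(prefix, tok))
        if best == END:
            return prefix
        prefix += best
        node = node[best]


def toy_score(prefix: str, tok: str) -> float:
    """Stand-in for model logits: prefers spelling the invalid name 'get_wether'."""
    target = "get_wether"
    if tok == END:
        return 1.0 if len(prefix) >= len(target) else -1.0
    if len(prefix) < len(target) and tok == target[len(prefix)]:
        return 2.0
    return 0.0


# Hypothetical tool names, for illustration only.
tools = ["get_weather", "get_time", "search"]
result = constrained_decode(build_trie(tools), toy_score)
print(result)
```

Although the toy scorer would prefer to spell the nonexistent name `get_wether`, the decoder only ever considers transitions present in the trie, so the output is always one of the valid names (here `get_weather`). A real system would presumably operate on the model's subword tokens and mask logits at each step, which this sketch does not attempt to show.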
