Dr. Lance B. Eliot is a world-renowned AI scientist and consultant whose AI columns have amassed over 8.1 million views and who has been featured on CBS 60 Minutes. As a seasoned CIO/CTO executive and high-tech entrepreneur, he combines practical industry experience with deep academic research.

The argument runs as follows: based on predictions that artificial general intelligence (AGI) is presumably nearing attainment, we ought to be giving due consideration right away to the upcoming well-being and overall welfare of AI. The deal is this. If AI is soon to be sentient, then we ought to be worrying about whether this sentient AI is being treated well and how we can ensure that suitable and proper humane treatment of AI is undertaken.

Therefore, if you believe that AGI is just around the corner, we ought to be proceeding full speed ahead on the AI welfare preparations needed. A zany game of one-upmanship has grabbed hold of the predictions for the date of AGI. If one person says it will be the year 2050, someone else gets brash headlines by saying the date is going to be 2035. The next person who wants headlines will top that by saying it is 2030. This gambit keeps happening. Some are even predicting that 2025 will be the year of AGI.

If AGI does arrive, the odds are that more than just one person will be needed in a given organization to suitably undertake AI welfare duties.

Third, the standing of AI ethics officers and similar positions is already somewhat dicey: firms were at first interested in hiring for such roles, and then the energy dissipated. Reports of AI ethics and AI safety teams being dismantled arrive almost daily. Instead of hiring AI welfare overseers, firms should once again be hiring and retaining AI ethics and AI safety personnel. Shift the excitement about AI welfare back over to AI ethics and AI safety personnel.

The gist is that AGI is undoubtedly going to be placed into positions where a semblance of ethics is involved and ethical choices are going to be made. How will AGI make those choices? Some assert that we need to take away any discretion by somehow articulating every possibility to the AGI, so that the AGI has no discretion.

Generative AI Large Language Models Llms Artificial General Intelligence AGI AI Welfare Officers Overseers AI Ethics Law Openai Chatgpt GPT-4O O1 Anthropic Claude Google Gemini Meta Llama Humanity Existential Risk

United States Latest News, United States Headlines

Similar News:You can also read news stories similar to this one that we have collected from other news sources.

Marvel Officially Debuts the Manga Version of Carnage, & He's Officially the Most Disturbing Symbiote YetManga Carnage Debuts in white and red

Marvel Officially Debuts the Manga Version of Carnage, & He's Officially the Most Disturbing Symbiote YetManga Carnage Debuts in white and red

Read more »

‘I honestly thought it was all over,’ and other worrying quotes of the weekQuotes from the week ending in Nov. 1, 2024

‘I honestly thought it was all over,’ and other worrying quotes of the weekQuotes from the week ending in Nov. 1, 2024

Read more »

Todd’s Take: Learn To Stop Worrying And Love The Transfer PortalWhere would Indiana athletics be without the unloved transfer portal? Many fans may not like it, but it’s had a transformative effect on the Hoosiers.

Todd’s Take: Learn To Stop Worrying And Love The Transfer PortalWhere would Indiana athletics be without the unloved transfer portal? Many fans may not like it, but it’s had a transformative effect on the Hoosiers.

Read more »

Stop Worrying So Much About Holiday Weight GainFlorida resident Joshua Walker isn’t concerned his health will take a hit from all the cakes, pies, cookies and candy that will tempt him during holiday gatherings.

Stop Worrying So Much About Holiday Weight GainFlorida resident Joshua Walker isn’t concerned his health will take a hit from all the cakes, pies, cookies and candy that will tempt him during holiday gatherings.

Read more »

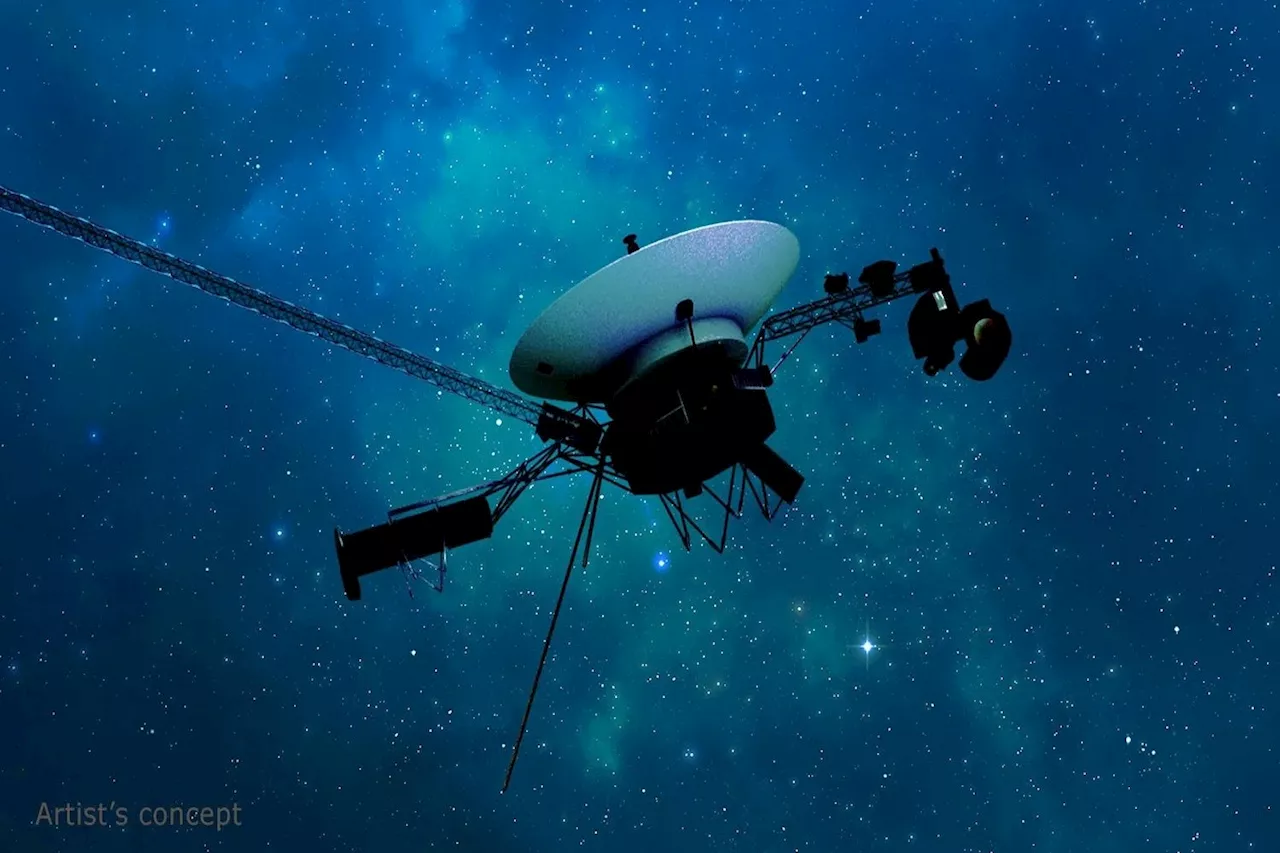

NASA’s Voyager 1 Finally Phones Home After Worrying Communications GlitchThe spacecraft was forced to rely on a radio transmitter that hadn't been used in 43 years.

NASA’s Voyager 1 Finally Phones Home After Worrying Communications GlitchThe spacecraft was forced to rely on a radio transmitter that hadn't been used in 43 years.

Read more »

Mohamed Salah Gives Worrying Update on Liverpool FutureMohamed Salah is disappointed in the lack of progress on a new contract at Liverpool.

Mohamed Salah Gives Worrying Update on Liverpool FutureMohamed Salah is disappointed in the lack of progress on a new contract at Liverpool.

Read more »