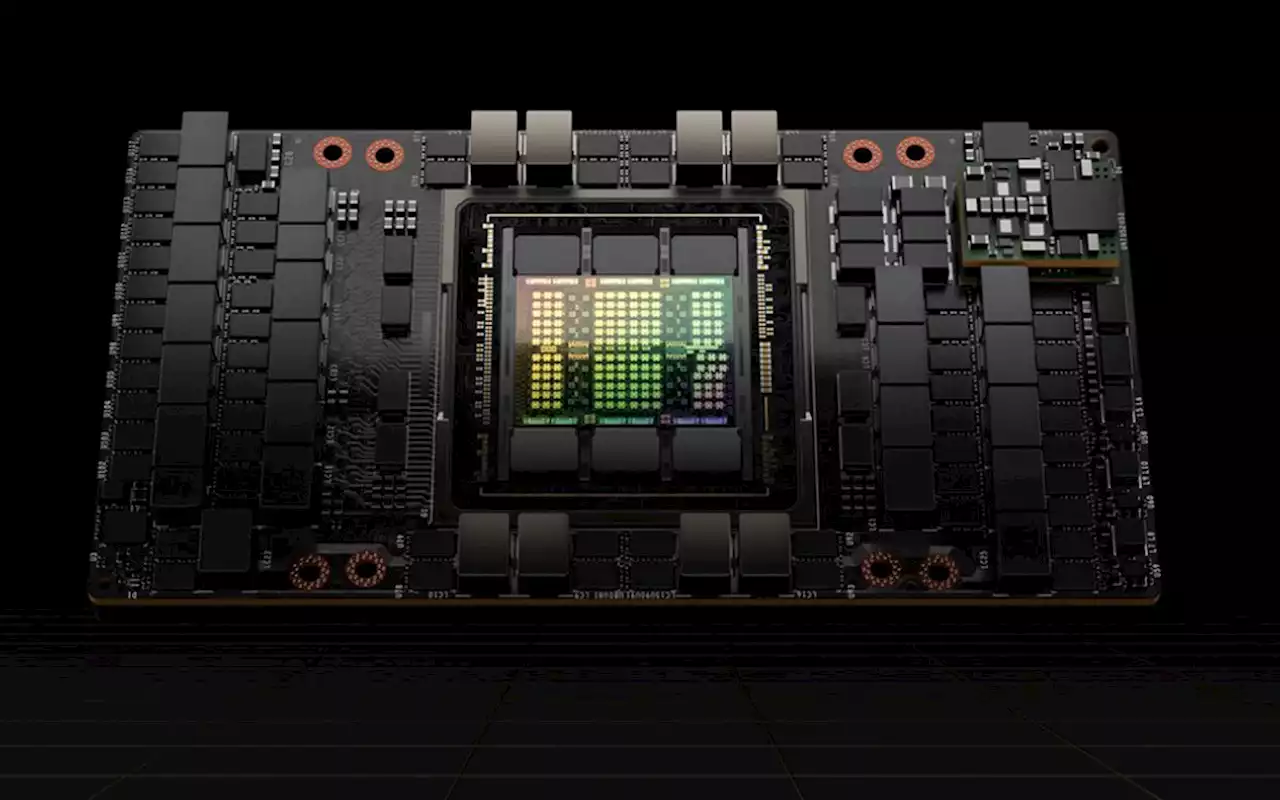

Nvidia Hopper Brings Massive Scale and Changes to CUDA at GTC 2022

The H100 chip packs 80 billion transistors and is built on a TSMC 4nm process customized for Nvidia. To go with Hopper, Nvidia will also release the Arm-based Grace CPU next year.

The architecture was inspired by the next generation of massive AI models, called transformers. Nvidia has built in a new processing unit for them, the Transformer Engine, much as it earlier added dedicated hardware for tensor operations. The Transformer Engine adaptively and dynamically processes training or inference data using Nvidia’s new 8-bit floating point (fp8) operations.
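To make the idea of 8-bit floating point with dynamic scaling concrete, here is a minimal Python sketch. It is not Nvidia's implementation: the article does not describe the fp8 bit layout or how the Transformer Engine chooses its scaling, so the sketch assumes an E4M3-style format (3 mantissa bits, largest finite value 448) and a simple per-tensor scale based on the largest magnitude. The names `round_to_fp8`, `quantize_with_scale`, and `dequantize` are hypothetical.

```python
import math

# Assumption: an E4M3-style fp8 format (3 stored mantissa bits, max finite 448).
FP8_MANTISSA_BITS = 3
FP8_MAX = 448.0


def round_to_fp8(x: float) -> float:
    """Round x to the nearest value with FP8_MANTISSA_BITS of mantissa.

    Simplified: saturates at +/-FP8_MAX and ignores subnormals and the
    minimum exponent, so tiny values keep more range than real fp8 would.
    """
    if x == 0.0:
        return 0.0
    magnitude = min(abs(x), FP8_MAX)
    # magnitude == mantissa * 2**exponent, with mantissa in [0.5, 1)
    mantissa, exponent = math.frexp(magnitude)
    # One implicit leading bit plus the stored mantissa bits.
    steps = 2 ** (FP8_MANTISSA_BITS + 1)
    rounded = round(mantissa * steps) / steps
    return math.copysign(math.ldexp(rounded, exponent), x)


def quantize_with_scale(values):
    """Scale values so the largest magnitude maps to FP8_MAX, then round."""
    amax = max(abs(v) for v in values)
    scale = FP8_MAX / amax if amax > 0 else 1.0
    return [round_to_fp8(v * scale) for v in values], scale


def dequantize(quantized, scale):
    """Undo the per-tensor scale to recover approximate original values."""
    return [q / scale for q in quantized]


if __name__ == "__main__":
    activations = [0.001, -0.0025, 0.004]
    quantized, scale = quantize_with_scale(activations)
    print(dequantize(quantized, scale))
```

Scaling before rounding is what keeps small values usable: with only a handful of mantissa bits, choosing the scale per tensor spends the limited range where the data actually lies.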

In addition to higher-performing fp8, Hopper’s Tensor Cores deliver twice the performance of Ampere for all other data formats. Hopper also offers higher clock speeds, more memory bandwidth and more cache than Ampere, bringing the total performance uplift to three times Ampere.

In an important development for security and workload isolation, the H100 chip adds support for confidential computing, making it the only GPU to do so.

Because Nvidia always considers data center scaling in its architectural planning, the H100 has a new fourth generation of NVLink for coherent GPU-to-GPU links that can extend across chassis. A new NVLink switch can scale up to 256 GPUs, 32 times the size of the previous NVLink domain. The bisection bandwidth, at 70TB/s, is 11 times that of its predecessor.
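The ratios quoted above imply figures for the previous generation. The short Python sketch below works them out; it uses only the numbers in this article, and because the multipliers are rounded, the results are approximations rather than official specifications. The constant and function names are illustrative.

```python
# Figures quoted in the article.
NVLINK_SWITCH_MAX_GPUS = 256      # GPUs in the new NVLink domain
DOMAIN_GROWTH_FACTOR = 32         # "32 times the size of the previous domain"
BISECTION_BANDWIDTH_TBPS = 70.0   # new bisection bandwidth, TB/s
BANDWIDTH_GROWTH_FACTOR = 11      # "11 times that of its predecessor"


def implied_previous_domain_gpus() -> int:
    """GPUs in the previous NVLink domain, implied by the quoted ratio."""
    return NVLINK_SWITCH_MAX_GPUS // DOMAIN_GROWTH_FACTOR


def implied_previous_bandwidth_tbps() -> float:
    """Predecessor bisection bandwidth in TB/s, implied by the quoted ratio."""
    return BISECTION_BANDWIDTH_TBPS / BANDWIDTH_GROWTH_FACTOR


if __name__ == "__main__":
    print(f"Previous NVLink domain: {implied_previous_domain_gpus()} GPUs")
    print(f"Previous bisection bandwidth: ~{implied_previous_bandwidth_tbps():.1f} TB/s")
```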

There’s also a new NVLink-C2C interface, which connects Nvidia chips to one another and can also connect third-party custom silicon to Nvidia chips. The company claims it is 25 times more power efficient and 90 times more area efficient than a PCIe Gen 5 PHY. Although Nvidia is a very visible holdout from Intel’s new UCIe standard for chip-to-chip interconnect, the company said it would support the standard. Terms for third parties to access NVLink-C2C were not revealed.

Nvidia will offer the H100 in multiple platforms, starting with a PCIe card, moving up to an Nvidia DGX H100 rack-mounted server, and scaling to a DGX SuperPOD with 32 connected DGX H100 servers. There will also be an H100 CNX Converged Accelerator, which not only adds an H100 GPU to a mainstream server but also adds high-performance networking with a ConnectX-7 SmartNIC on the same PCIe card.

H100 systems start shipping in the third quarter of 2022. The H100 is designed for air- and water-cooled systems at up to 700W.
