In this paper we study how the “semantic guesswork” produced by language models can be utilized as a guiding heuristic for planning algorithms.

This paper is available on arXiv under the CC 4.0 DEED license. Authors: Dhruv Shah, UC Berkeley (equal contribution); Michael Equi, UC Berkeley (equal contribution); Blazej Osinski, University of Warsaw; Fei Xia, Google DeepMind; Brian Ichter, Google DeepMind; Sergey Levine, UC Berkeley and Google DeepMind.

Table of Links: Abstract & Introduction; Related Work; Problem Formulation and Overview; LFG: Scoring Subgoals by Polling LLMs; LLM Heuristics for Goal-Directed Exploration; System Evaluation; Discussion and References; A.


Similar News: You can also read news stories similar to this one that we have collected from other news sources.

Navigation with Large Language Models: LLM Heuristics for Goal-Directed Exploration
In this paper we study how the “semantic guesswork” produced by language models can be utilized as a guiding heuristic for planning algorithms.


Navigation with Large Language Models: LFG: Scoring Subgoals by Polling LLMs
In this paper we study how the “semantic guesswork” produced by language models can be utilized as a guiding heuristic for planning algorithms.


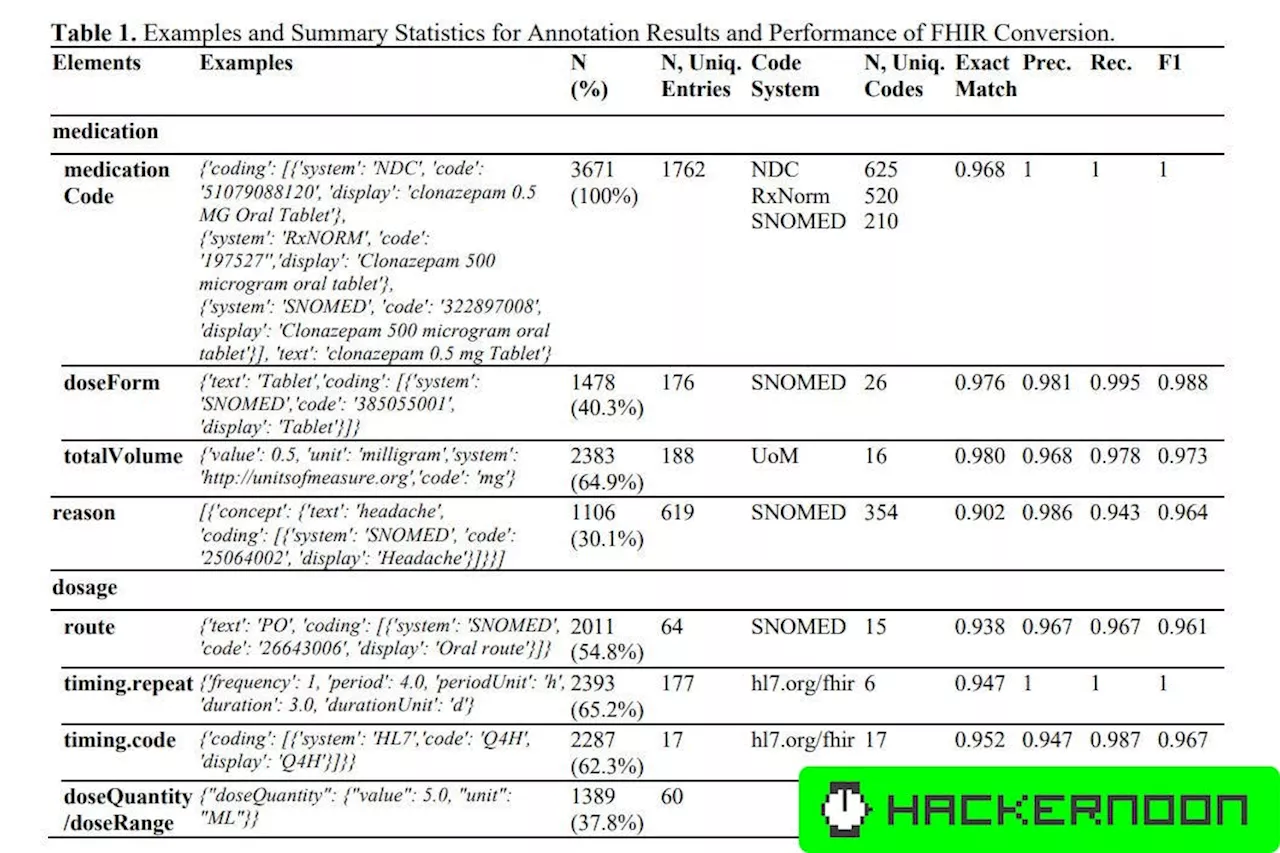

Enhancing Health Data Interoperability with Large Language Models: A FHIR Study
Discover how LLMs revolutionize healthcare by directly transforming unstructured clinical notes into Fast Healthcare Interoperability Resources (FHIR).


Revolutionizing Software Development With Large Language Models
Son Nguyen is the co-founder & CEO of Neurond AI, a company providing world-class Artificial Intelligence and Data Science services. Read Son Nguyen's full executive profile here.


Large Language Models’ Emergent Abilities Are a Mirage
A new study suggests that sudden jumps in LLMs’ abilities are neither surprising nor unpredictable, but are actually the consequence of how we measure ability in AI.


Breakthrough Behavior in Large Language Models
Researchers have discovered breakthrough behavior in large language models, which has implications for AI safety and potential.
