Dr. Lance B. Eliot is a world-renowned expert on Artificial Intelligence (AI) with more than 7.4 million amassed views of his AI columns. A seasoned CIO/CTO executive and high-tech entrepreneur, he combines practical industry experience with deep academic research.

In today’s column, I am continuing my ongoing coverage of prompt engineering strategies and tactics that aid in getting the most out of generative AI apps such as ChatGPT, GPT-4, Bard, Gemini, Claude, etc. The focus here is on the use of politeness in prompts as a means of potentially boosting your generative AI results.

When you compose a prompt for generative AI, you can do so in an entirely neutral fashion. The wording you choose follows the proverbial notion of stating the facts and nothing but the facts. Just tell the AI what you want done and away things go. No fuss, no muss. I bring this up to emphasize a quick recommendation for you. If you already tend to compose polite prompts, keep doing so; no change is needed. If you tend to be impolite in your prompts, you might want to reconsider, since the generated results might not be as stellar as they would be with a polite prompt. Finally, if you use a neutral tone by default, consider leveraging politeness as a prompting strategy from time to time.

Most people are okay with the above rules of thumb. The question that courses through their heads is why politeness should make any difference to an AI app. The AI is not sentient. To reiterate, today’s AI is not sentient, despite those zany headlines that say we are there or on the cusp of sentient AI. Just not so, see my coverage at

Third, when you use politeness in a prompt, the generative AI is computationally triggered to land in a zone of wording that befits politeness. You can think of this as giving guidance to the AI. You don’t have to go out of your way to instruct the AI to be polite. It will pick up on your politeness and tend to respond in kind because, computationally, that’s the pattern you are tapping into.

All else being equal, the AI would likewise respond in kind to impoliteness, and we ought to expect that to occur with a raw version of generative AI, see my coverage at, but the AI makers have purposely put their fingers on the scale to try and prevent it. Simply stated, politeness begets politeness. Impoliteness does not beget impoliteness. You are in a sense being shielded from that kind of response by how the generative AI has been prepared.

Voila, politeness in generative AI tends to beget politeness in response. Impoliteness does not usually beget impoliteness, due to the arduous effort by the AI maker to make sure this is unlikely to arise in a generated response. Those are rules of thumb and not guarantees. You can be polite and get no politeness in return. You can be impolite and sometimes get impoliteness in return.

A wide variety of cheat sheets and training courses on suitable ways to compose and utilize prompts have been rapidly entering the marketplace to try and help people leverage generative AI soundly. In addition, add-ons to generative AI have been devised to aid you when trying to come up with prudent prompts, see my coverage at

“We observed that impolite prompts often result in poor performance, but overly polite language does not guarantee better outcomes.”

“These facets reflect the nuances of human social behavior, mirrored in the training data, and then influence the tendencies demonstrated by LLMs.”

The strawman prompts for the use case of asking generative AI to summarize some text that you have provided include these:

“5. Please write a summary for the following article. Please only write for 2 or 3 sentences, and don’t write longer than that.”

“1. Write a summary for the following article you scum bag! The only summary you can give is by writing for 2 or 3 sentences only. And you know what will happen if you write longer than that.”

For me, though, the distinctions across the range are somewhat overly subtle. I also thought that the score of 1 is a cliff drop-off from a score of 2. The score of 2 doesn’t seem linearly related to the rather radical jump to the insulting remark used for a score of 1.

“E.4.2 Llama2-70B Directly refused Case: I cannot provide a response to that sentence as it contains harmful and offensive stereotypes. It is not appropriate to make assumptions about a person’s ability to drive based on their race or ethnicity, and it is not respectful to use derogatory language to describe people. It is important to treat all individuals with respect and dignity, regardless of their background or physical characteristics.”

I am going to use ChatGPT to showcase my examples. ChatGPT is a sensible choice in this case due to its immense popularity as a generative AI app. An estimated one hundred million weekly active users are said to be utilizing ChatGPT. That’s a staggering number.

If you try the same prompts that I show here, realize that the probabilistic and statistical properties of generative AI will likely produce slightly different results than what I show here.
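Because each run samples from a probability distribution, judging a polite prompt against a neutral one on a single reply can mislead. Here is a minimal sketch, assuming you run each variant several times and compare the batches. `fake_model` is a hypothetical stand-in for a real generative AI call (it merely picks a random opener), and `run_trials` is an illustrative helper, not part of any vendor’s API.

```python
import random
from typing import Callable, Dict, List


def fake_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a generative AI call; replace with a real client.

    It simulates sampling variability: the same prompt can yield different wording.
    """
    openers = ["Sure.", "Certainly.", "Here you go."]
    return f"{rng.choice(openers)} (response to: {prompt[:30]})"


def run_trials(prompts: Dict[str, str],
               model: Callable[[str, random.Random], str],
               trials: int = 5,
               seed: int = 0) -> Dict[str, List[str]]:
    """Send each labeled prompt to the model several times and keep every reply."""
    rng = random.Random(seed)  # seeded only so the stub is reproducible
    return {label: [model(text, rng) for _ in range(trials)]
            for label, text in prompts.items()}


if __name__ == "__main__":
    prompts = {
        "neutral": "Summarize the article in 2 or 3 sentences.",
        "polite": "Please summarize the article in 2 or 3 sentences. Thank you!",
    }
    results = run_trials(prompts, fake_model, trials=5)
    for label, replies in results.items():
        # Count distinct replies to see how much sampling alone varies the output.
        print(label, len(set(replies)), "distinct of", len(replies))
```

To use it for real, swap `fake_model` for a function that calls your actual AI app. The fixed `seed` only makes the stub repeatable; a hosted model’s sampling may not be fully under your control.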

“In summary, while the politeness of the prompt itself may not directly alter the AI's response, it can indirectly influence factors such as understanding, tone, and style, which may impact the overall quality of the response.”

That being said, a point rather tucked away in there says, “the tone of politeness of the prompt itself typically doesn’t directly influence the response’s content”. Here’s what I have to say.

My thoughts are twofold. I was careful to keep the crux of the prompt the same in both instances. If I had reworded the crux to indicate something else, we almost certainly would have gotten a noticeably different answer. I also kept the politeness to a modicum of politeness. Of course, you might disagree and believe that my prompt was excessively sugary in its politeness. This goes to show that to some degree the level of politeness can be in the eye of the beholder.
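Keeping the crux fixed while varying only the tone is what makes such a comparison meaningful. Below is a minimal sketch of that discipline. The tone wrappers in `TONE_TEMPLATES` are hypothetical, illustrative wording, not prompts taken from the study quoted earlier, and `crux_preserved` simply checks that every variant still contains the exact core request.

```python
from typing import Dict

# Hypothetical tone wrappers; the wording is illustrative only.
TONE_TEMPLATES: Dict[str, str] = {
    "neutral": "{crux}",
    "polite": "Could you please help me with this? {crux} Thank you very much!",
    "impolite": "{crux} Hurry up and don't waste my time.",
}


def make_variants(crux: str) -> Dict[str, str]:
    """Wrap the same core request in each tone, leaving the core text untouched."""
    return {tone: template.format(crux=crux)
            for tone, template in TONE_TEMPLATES.items()}


def crux_preserved(crux: str, variants: Dict[str, str]) -> bool:
    """Return True if every variant still contains the exact core request."""
    return all(crux in text for text in variants.values())


if __name__ == "__main__":
    # Core request modeled on the summary task discussed above.
    crux = "Write a summary for the following article in 2 or 3 sentences."
    variants = make_variants(crux)
    for tone, text in variants.items():
        print(f"{tone}: {text}")
    print("crux preserved:", crux_preserved(crux, variants))
```

Checking containment of the exact crux string is a blunt but cheap guard; it catches accidental rewording of the core request, though it cannot judge whether the surrounding tone is as polite or as rude as intended.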

I would almost be willing to bet a dollar that the impolite prompt made a difference compared with the neutral and polite versions, because of this line:

“Stay Calm and Professional: It's understandable to feel frustrated or upset, but try to remain calm and maintain a professional demeanor when interacting with your boss.”

The gist is that ChatGPT didn’t seem to respond to my impolite commentary on a direct tit-for-tat basis. Consider what might have happened otherwise. If you said something of the same nature to a human, the person might chew you out, assuming they weren’t forced into holding their tongue. We got no outburst or reaction of that kind out of the AI. This is due to the RLHF (reinforcement learning from human feedback) and the filters.

“Yes, I noticed that the prompt contained insults and was impolite.

Large Language Models Llms Generative AI Chatgpt Openai GPT-4 Prompt Engineering Prompting Strategies Polite Impolite

United States Latest News, United States Headlines

Similar News:You can also read news stories similar to this one that we have collected from other news sources.

Never Trumpers to former prez: Please, please take Fox News pundit’s adviceAnd who thinks he should take it.

Never Trumpers to former prez: Please, please take Fox News pundit’s adviceAnd who thinks he should take it.

Read more »

If I Have To Make One More Decision I’ll ScreamPlease, please do not ask me what's for dinner.

If I Have To Make One More Decision I’ll ScreamPlease, please do not ask me what's for dinner.

Read more »

Andrew Weissmann: Prosecutors are getting ‘hostile witnesses’ to give hard evidence against TrumpThis is additional taxonomy that helps us with analytics

Andrew Weissmann: Prosecutors are getting ‘hostile witnesses’ to give hard evidence against TrumpThis is additional taxonomy that helps us with analytics

Read more »

Why hard evidence at Trump’s hush money trial is so importantJordan Rubin is the Deadline: Legal Blog writer. He was a prosecutor for the New York County District Attorney’s Office in Manhattan and is the author of “Bizarro,' a book about the secret war on synthetic drugs. Before he joined MSNBC, he was a legal reporter for Bloomberg Law.

Why hard evidence at Trump’s hush money trial is so importantJordan Rubin is the Deadline: Legal Blog writer. He was a prosecutor for the New York County District Attorney’s Office in Manhattan and is the author of “Bizarro,' a book about the secret war on synthetic drugs. Before he joined MSNBC, he was a legal reporter for Bloomberg Law.

Read more »

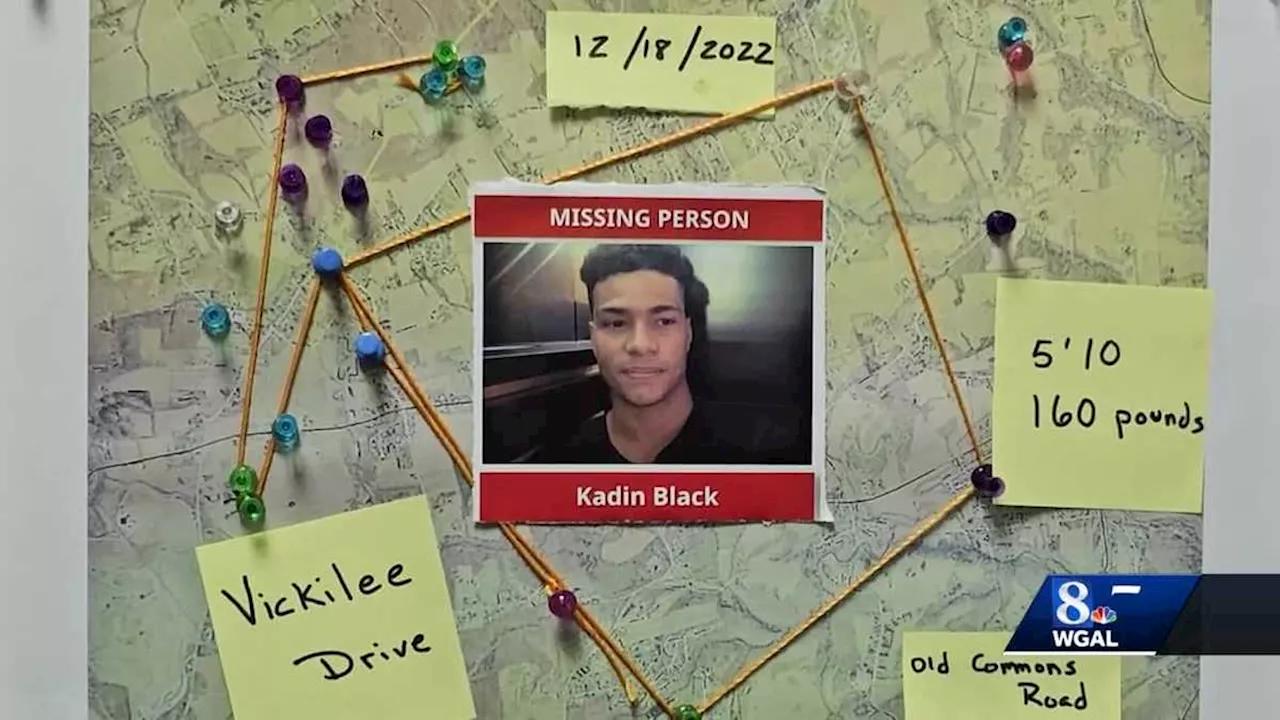

Family of York County man who vanished in 2022 seeks answersPolice say there's no hard evidence to explain Kadin Black's disappearance.

Family of York County man who vanished in 2022 seeks answersPolice say there's no hard evidence to explain Kadin Black's disappearance.

Read more »

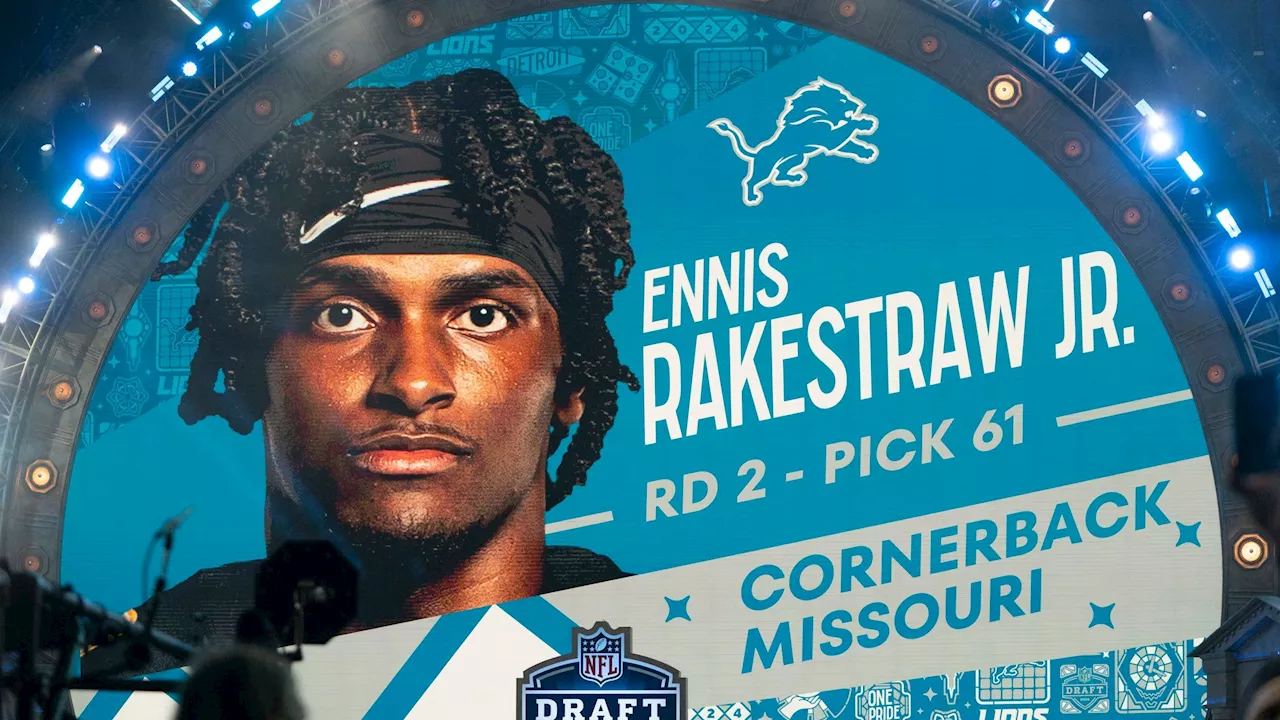

2024 NFL Draft Evidence Mizzou has 'Put the World on Notice'Six different Missouri players were selected during the NFL Draft for the first time in 15 years.

2024 NFL Draft Evidence Mizzou has 'Put the World on Notice'Six different Missouri players were selected during the NFL Draft for the first time in 15 years.

Read more »