Google has removed a commitment to refrain from using AI for potentially harmful applications, such as weapons and surveillance, according to its updated AI Principles. The change reflects a growing ambition to offer AI technology to a wider range of users, including governments, amidst increasing competition in the AI field.

Google has made a significant shift in its approach to artificial intelligence (AI) by removing a pledge to abstain from using AI for potentially harmful applications, such as weapons and surveillance. This change was revealed in the company's updated AI Principles, which now emphasize a focus on maximizing the benefits of AI while mitigating potential risks.

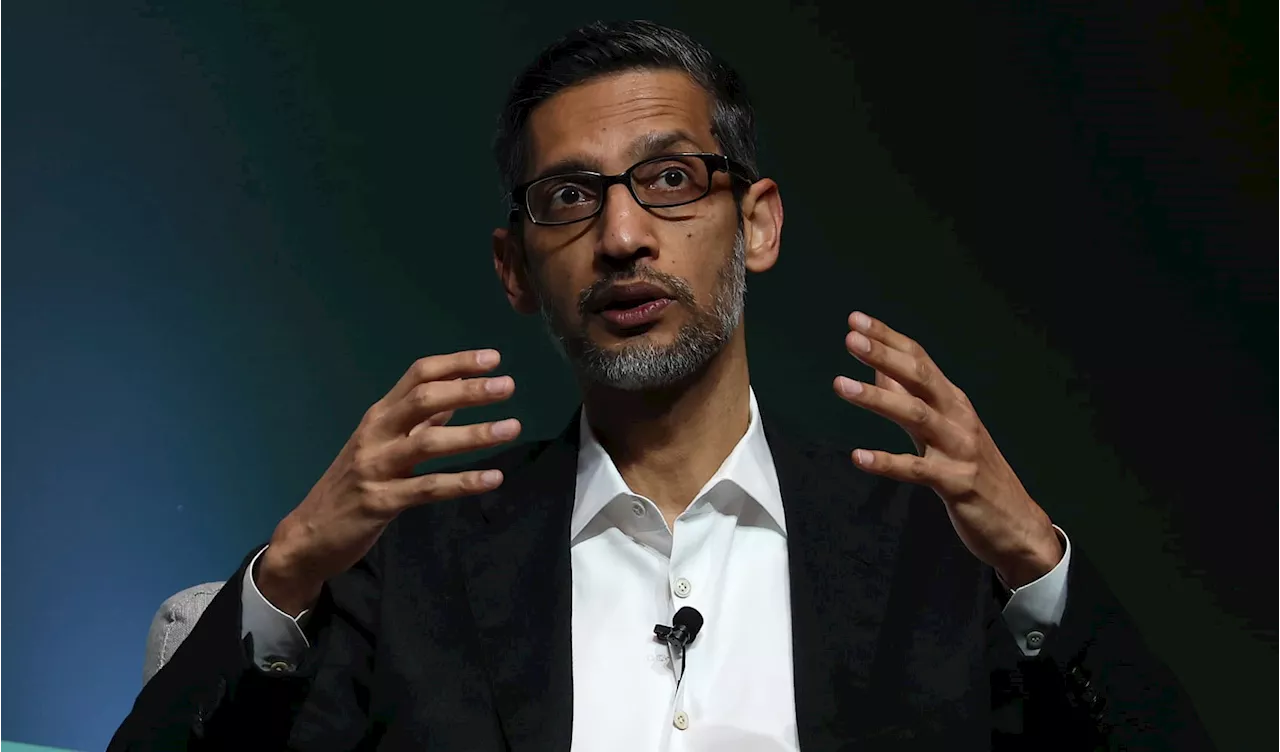

Previously, Google's AI Principles explicitly stated that the company would not develop or deploy AI for weapons or surveillance purposes that violated internationally accepted norms. The updated principles take a more nuanced stance, acknowledging the complexities of the global AI landscape and the potential for AI to be used both for good and for harm.

Demis Hassabis, CEO of Google DeepMind, highlighted the global competition for AI leadership in a blog post explaining the changes. He emphasized the importance of democracies leading in AI development, guided by core values like freedom, equality, and respect for human rights. The shift nevertheless comes amid growing concerns about the potential misuse of AI, particularly in the context of rising geopolitical tensions and the escalating AI arms race between the United States and China.

The change has drawn both support and criticism. Some argue that the company's previous stance was too restrictive and hindered its ability to contribute to the advancement of AI for the benefit of society. Others are concerned that removing the pledge to avoid harmful applications could lead to the development and deployment of AI systems that pose significant risks to human rights and global security. Google maintains that it will continue to operate within the bounds of international law and human rights, carefully evaluating the potential risks and benefits of each AI project.

The company's past decisions on AI contracts illustrate the ongoing struggle to balance the potential benefits of AI against the ethical implications of its application. Google was initially involved in Project Maven, which analyzed drone footage for the US military, and later withdrew because of internal employee dissent. The updated AI Principles reflect this tension and the company's attempt to navigate the complex ethical landscape of AI development in a rapidly changing world.

