The emergence of DeepSeek, a Chinese startup with a powerful reasoning model comparable to ChatGPT, has sent shockwaves through the AI landscape. While DeepSeek's success has fueled China's AI ambitions and shaken the US stock market, it has also triggered anxieties about the rapid pace of AI development and the lack of robust safety measures. The resignation of another key OpenAI safety researcher, Steven Adler, amid these developments amplifies those concerns. Adler's departure, coupled with his stark warnings about the dangers of the AGI race and the need for greater focus on AI alignment, raises critical questions about the future of AI and its potential impact on humanity.

The recent AI landscape has been significantly influenced by DeepSeek, a Chinese startup that has made waves with its powerful reasoning model, DeepSeek R1. Despite not possessing the vast hardware and infrastructure resources of OpenAI, DeepSeek achieved comparable performance to ChatGPT by leveraging older chips and innovative software optimizations. Accusations from OpenAI that DeepSeek may have distilled ChatGPT's data to train precursors of R1 are seemingly inconsequential.

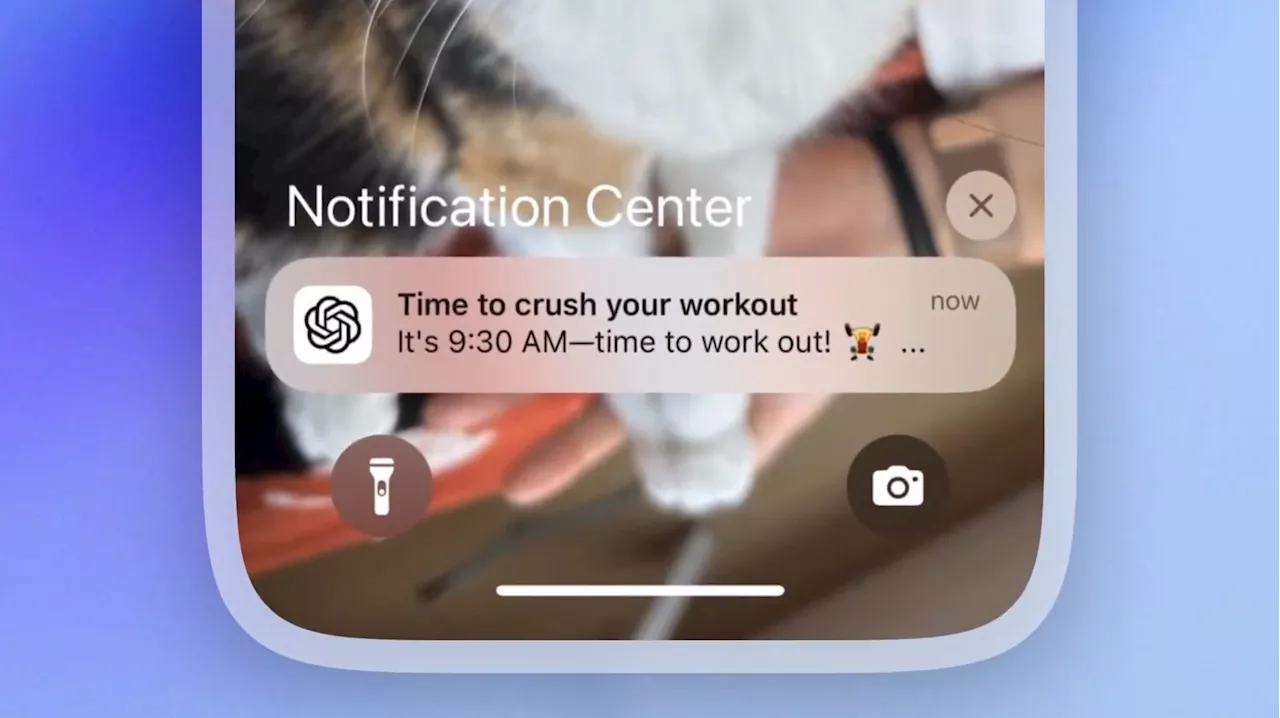

DeepSeek's project appears to have succeeded on multiple fronts. Firstly, it has leveled the playing field in the AI wars, giving China a formidable contender in the global AI race. Secondly, it inflicted a substantial financial blow on the US stock market, wiping out nearly $1 trillion in market capitalization, with AI hardware companies bearing the brunt of the decline. Finally, DeepSeek has gifted China a potent software weapon, potentially even more influential than TikTok. The DeepSeek app has soared to the top spot on the App Store, and its open-source nature allows anyone to install the DeepSeek model on their computer and use it to develop other models.

Amid this whirlwind of developments, reports that another key OpenAI safety researcher, Steven Adler, has resigned from the company might be overshadowed. His resignation adds to the growing list of engineers who have departed OpenAI within the past year. Adler's announcement, made public on Monday, coincided with the DeepSeek news that triggered the market crash, adding an intriguing layer to the timing.

After four years at OpenAI, Adler expressed his gratitude for the experience, highlighting his involvement in various significant projects, including dangerous capability evaluations, agent safety and control, AGI, and online identity. However, his departure statement carried a bleak undertone, reflecting his deep concern about the rapid pace of AI development.

Adler echoed the anxieties of other AI experts who foresee a dystopian future where AI surpasses human control. He candidly voiced his worries about the future, questioning whether humanity would even survive to witness the next generation. Adler's concerns about the AGI race and the lack of solutions for AI alignment are particularly noteworthy. He cautioned that the relentless pursuit of AGI, without addressing its inherent risks, could lead to catastrophic consequences.

His remarks suggest that he may have witnessed AGI research at OpenAI, a field the company is actively pursuing. Adler's departure raises several questions about the direction of AI development and the potential dangers of unchecked progress. It also underscores the importance of ethical considerations and responsible development practices in the field of artificial intelligence.

