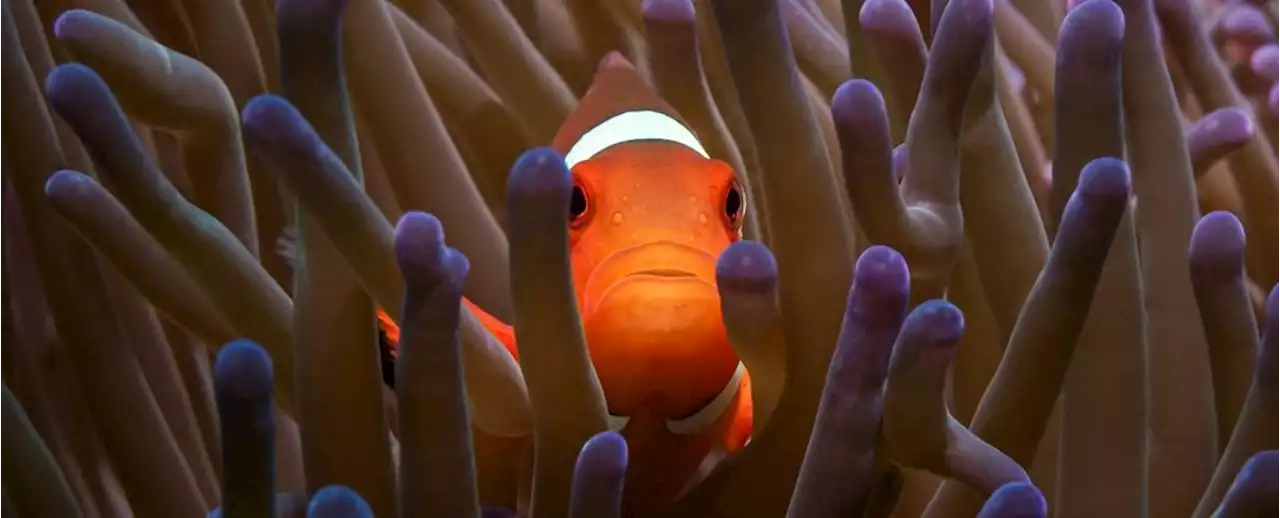

We tend to associate healthy coral reefs with their visual splendor: the vibrant array of colors and shapes that populate these beautiful underwater ecosystems.

The recordings encompassed four distinct types of reef habitat – healthy, degraded, mature restored, and newly restored – each of which exhibited a different amount of coral cover and consequently generated a different character of noise from the aquatic creatures living and feeding in the area.

"Previously we relied on manual listening and annotation of these recordings to make reliable comparisons," one of the researchers explains. "However, this is a very slow process and the size of marine soundscape databases is skyrocketing given the advent of low-cost recorders."

To automate the process, the team trained a machine learning algorithm to discriminate between the different kinds of coral recordings. Subsequent tests showed the AI tool could identify reef health from audio recordings with 92 percent accuracy.

"In many cases it's easier and cheaper to deploy an underwater hydrophone on a reef and leave it there than to have expert divers visiting the reef repeatedly to survey it – especially in remote locations," says co-author and marine biologist Timothy Lamont from Lancaster University in the UK.

While the human ear might not be able to easily identify such faint and hidden sounds, machines can detect the differences reliably well, it seems, although the researchers acknowledge the method can still be refined further, with greater sound sampling in the future expected to deliver