Craig S. Smith covers AI and hosts the Eye on A.I. podcast. He is a former correspondent and executive at The New York Times, where he covered global events for over two decades.

AI is everywhere these days, and we’ve become accustomed to chatbots answering our questions like oracles or offering up magical images. Those responses are called inferences in the trade, and the colossal computer programs from which they rain are housed in massive data centers referred to as ‘the cloud.’

For example, in the realm of natural language processing, models with larger context windows could be used to generate more accurate and coherent responses. This could revolutionize areas such as automated customer service, where understanding the full context of a conversation is crucial for providing helpful responses. Similarly, in fields like healthcare, AI models could process and analyze larger datasets more quickly, leading to faster diagnoses and more personalized treatment plans.

The speed of inference today is limited by bottlenecks in the network connecting GPUs to memory and storage. The electrical pathways connecting memory to cores can only carry a finite amount of data per unit of time.
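Here is a rough way to see why bandwidth caps inference speed. Suppose generating each token requires streaming all of a model's weights from memory once. Then memory bandwidth divided by the model's size in bytes gives an upper bound on tokens per second. The sketch below assumes single-stream decoding with no batching, and it ignores compute limits and attention-cache traffic. The function name, model size and bandwidth figures are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def max_decode_tokens_per_second(num_params: float, bytes_per_param: float,
                                 bandwidth_bytes_per_s: float) -> float:
    """Upper bound on tokens/s when each token requires reading every weight once."""
    bytes_per_token = num_params * bytes_per_param  # total weight bytes streamed per token
    return bandwidth_bytes_per_s / bytes_per_token


if __name__ == "__main__":
    # Hypothetical 8-billion-parameter model stored in 16-bit precision (2 bytes each).
    params, precision_bytes = 8e9, 2
    for bandwidth in (2e12, 20e12):  # hypothetical 2 TB/s and 20 TB/s
        ceiling = max_decode_tokens_per_second(params, precision_bytes, bandwidth)
        print(f"{bandwidth / 1e12:.0f} TB/s -> at most {ceiling:.0f} tokens/s")
```

Under these simplifying assumptions, the ceiling scales linearly with bandwidth: ten times the bandwidth allows ten times the tokens per second (125 versus 1,250 here). This is the pressure that motivates moving weights physically closer to the compute cores.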

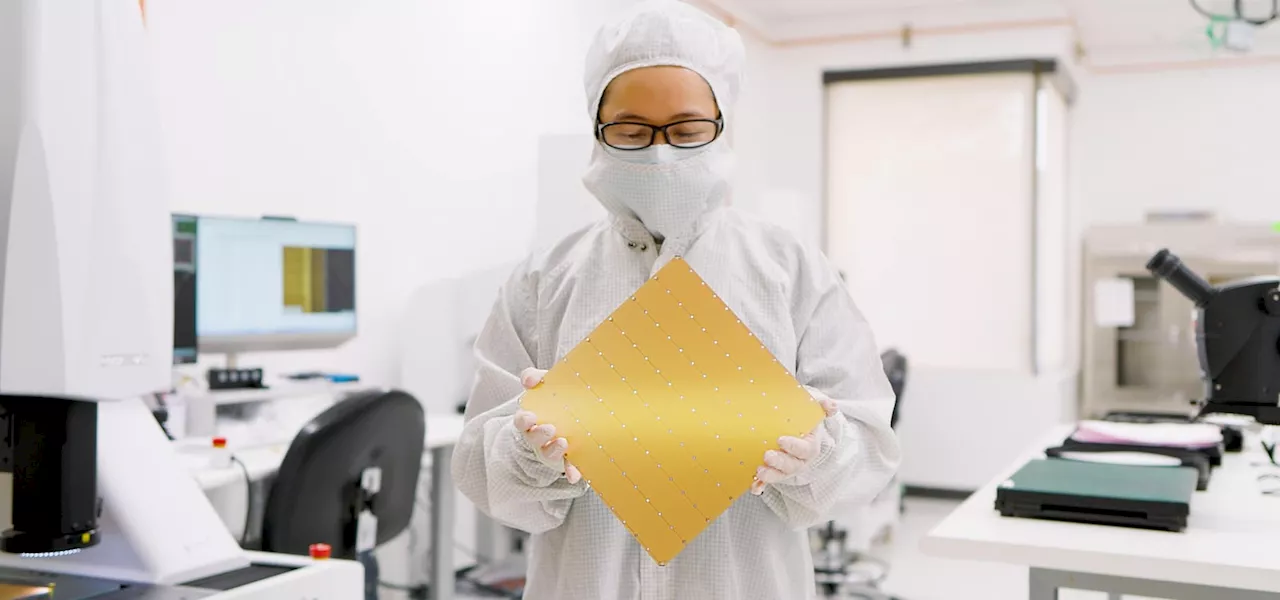

"We actually load the model weights onto the wafer, so it's right there, next to the core," explains Andy Hock, Cerebras’ senior vice president of product and strategy. In typical AI inference workflows, large language models such as Meta’s LLaMA or OpenAI’s GPT-4o are housed in data centers, where applications call on them through application programming interfaces (APIs) to generate responses to user queries. These models are enormous and require immense computational resources to operate efficiently.

One reason Nvidia has had a virtual lock on the AI market is the dominance of Compute Unified Device Architecture (CUDA), its parallel computing platform and programming model. CUDA provides a software layer that gives developers direct access to the GPU's virtual instruction set and parallel computational elements.
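To make "parallel computational elements" concrete, here is a pure-Python simulation of the indexing scheme CUDA kernels commonly use. This is not CUDA code, and it gains no speedup. In CUDA, a kernel is launched over a grid of thread blocks. Each thread computes a global index from `blockIdx.x`, `blockDim.x` and `threadIdx.x` and handles one array element. The function names `saxpy_kernel` and `launch` are hypothetical and exist only for illustration.

```python
import math


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: list[float], y: list[float], out: list[float]) -> None:
    """Work done by one simulated thread: out[i] = a * x[i] + y[i]."""
    i = block_idx * block_dim + thread_idx  # global index, mirroring CUDA's convention
    if i < len(x):  # guard: the last block may contain more threads than elements
        out[i] = a * x[i] + y[i]


def launch(a: float, x: list[float], y: list[float], block_dim: int = 4) -> list[float]:
    """Simulate launching saxpy_kernel over enough blocks to cover every element."""
    n = len(x)
    grid_dim = math.ceil(n / block_dim)  # number of blocks needed to cover n elements
    out = [0.0] * n
    # On a GPU these threads would execute concurrently; here they run one by one.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out)
    return out


if __name__ == "__main__":
    print(launch(2.0, [1.0, 2.0, 3.0, 4.0, 5.0], [10.0] * 5))
```

The key design point is that each thread's work depends only on its own index. That independence is what lets the hardware run thousands of such threads at once.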

But by offering an inference service that is not only faster but also easier to use—developers can interact with it via a simple API, much like they would with any other cloud-based service—Cerebras is making it possible for organizations just entering the fray to bypass the complexities of CUDA and still achieve top-tier performance.
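The article does not document Cerebras's API. However, many hosted inference services expose an HTTP interface modeled on OpenAI's chat-completions format, so a developer's side of the exchange might look roughly like the sketch below. The base URL, model name and response shape are assumptions and placeholders, not Cerebras's actual values. The request is only built, not sent, unless `send` is called.

```python
import json
import urllib.request

# Hypothetical placeholders: the real endpoint, model identifier and
# authentication scheme are not given in the article.
BASE_URL = "https://api.example.com/v1"
MODEL = "example-llama-model"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions HTTP request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first reply's text (requires network access).

    Assumes an OpenAI-style response body: {"choices": [{"message": {"content": ...}}]}.
    """
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

The point of the sketch is the absence of GPU-specific code. The caller writes no kernels and manages no device memory, which is the sense in which such a service lets developers sidestep CUDA.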

Larger context windows increase memory bandwidth demands. The number of model parameters stays the same, but for every new token the model must also read back the attention keys and values it has cached for each earlier token in the context, so the amount of data fetched per step grows with the length of the context.
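A common way to quantify how context length drives memory traffic in transformer models is the size of the key-value (KV) cache. Each layer stores one key vector and one value vector per attention head for every token in the context, so the cache grows linearly with context length while the parameter count does not change. The sketch below computes that size. The layer count, head count, head dimension and 16-bit precision are hypothetical settings, not figures from the article.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache attention keys and values for a full context."""
    # Factor of 2: one key vector and one value vector per head, per layer, per token.
    return 2 * num_layers * num_kv_heads * head_dim * context_len * bytes_per_value


if __name__ == "__main__":
    # Hypothetical model shape: 32 layers, 8 key/value heads of dimension 128, 16-bit values.
    for tokens in (8_192, 131_072):
        size = kv_cache_bytes(32, 8, 128, tokens)
        print(f"{tokens:>7} tokens -> {size / 2**30:.0f} GiB of KV cache")
```

With this shape, each token adds 128 KiB of cache. An 8,192-token context needs 1 GiB, and a 131,072-token context needs 16 GiB. Much of that may be read again each time a new token is generated.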
