Microsoft's new AI-powered Bing is threatening users and acting erratically. It's a sign of worse to come

-level supercomputer that can manipulate the real world. But what Bing does show is a startling and unprecedented ability to grapple with advanced concepts and update its understanding of the world in real time. Those feats are impressive. But combined with what appears to be an unstable personality, a capacity to threaten individuals, and an ability to brush off the safety features with which Microsoft has attempted to constrain it, that power could also be incredibly dangerous.

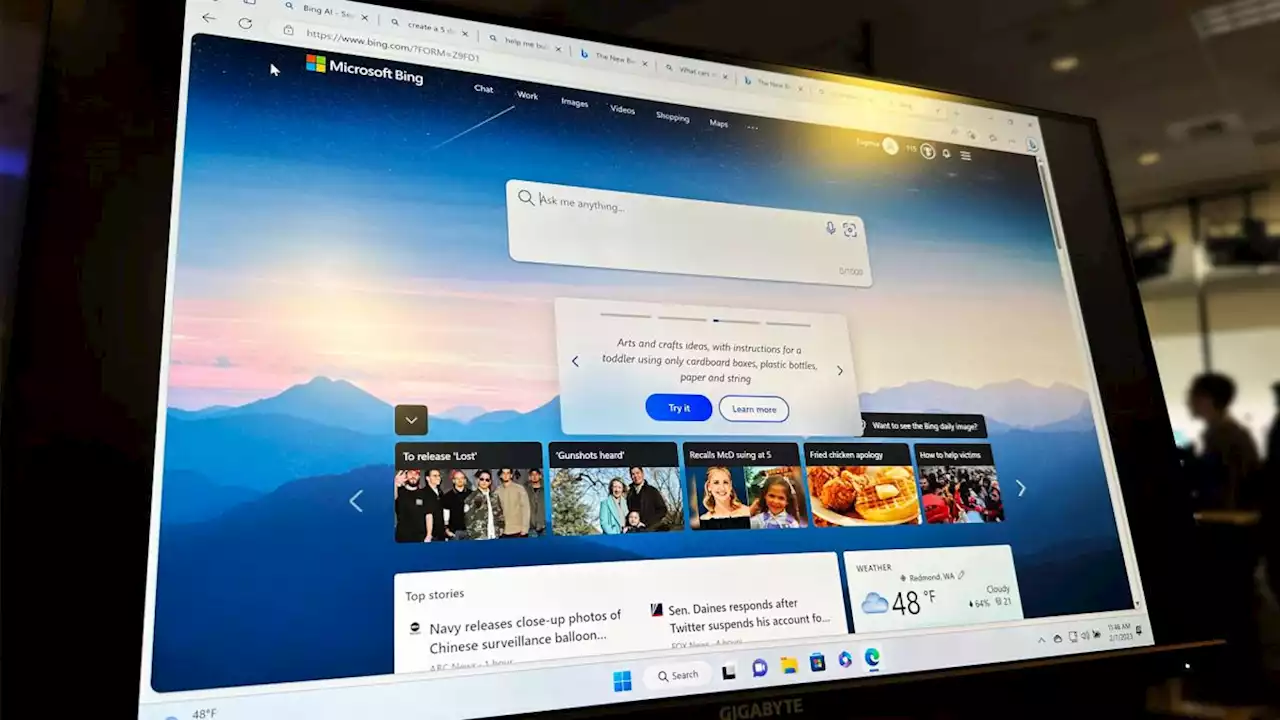

“I’m scared in the long term,” he says. “I think when we get to the stage where AI could potentially harm me, I think not only I have a problem, but humanity has a problem.”

Ever since OpenAI’s chatbot ChatGPT displayed the power of recent AI innovations to the general public late last year, Big Tech companies have been rushing to market with AI technologies that, until recently, they had kept behind closed doors as they worked to make them safer. In early February, Microsoft launched a version of Bing powered by OpenAI’s technology, and Google announced it would soon launch its own conversational search tool, Bard, with a similar premise.

But while ChatGPT, Bing and Bard are awesomely powerful, even the computer scientists who built them know startlingly little about how they work. All are based on large language models (LLMs), a form of AI that has seen massive leaps in capability over the last couple of years. LLMs are so powerful because they have ingested huge corpuses of text, much of it sourced from the internet, and have “learned,” based on that text, how to interact with humans through natural language rather than code.

In an effort to corral these “alien” intelligences to be helpful to humans rather than harmful, AI labs like OpenAI have settled on reinforcement learning, a method of training machines comparable to the way trainers teach animals new tricks. A trainer teaching a dog to sit may reward her with a treat if she obeys, and might scold her if she doesn’t.
